Thanks to new technology, 2022 has seen a flurry of artificial imagery.

Whether it’s Kermit in Blade Runner, a confused grizzly bear or Walter White as a Muppet, the latest tech has a remarkable knack for creating artistic, lifelike images.

What Is Artificial Intelligence?

Artificial intelligence is a branch of computer science concerned with building smart machines that can perform tasks typically requiring human intelligence.

The developments are rapid; possibilities unimaginable. With each change, an assortment of new toys emerges, with a procession of rabid consumers ready to take the plunge.

The process is simple. The AI is a text-to-image generator, meaning the user inputs a prompt, and the AI visualizes it. For instance, this image used the prompt: Cat bunny. Simple enough.

With the rise of Midjourney and DALL-E, we have to wonder, how does AI do it? What goes into the technical and creative choices behind each creation? And most importantly, can we try it?

How Does AI Image Generating Work?

Imagine the difference between a sandwich and a pineapple. While the visual distinction may be clear to you, it’s a trickier task for a computer.

For image generation, researchers feed machines millions upon millions of images. They annotate the data sets so the machine has a text reference for each image, and the model is tweaked and calibrated until it can recognize what the photos show. Thus, it learns to tell pineapples from sandwiches and can start to make its own.

These new tools are mind-boggling. And public. On September 28th, OpenAI made a staggering announcement: DALL-E 2 is open and accessible to all, with paid credits for continued use, of course.

This year alone has seen the rise of DALL-E 2, Midjourney, Craiyon and the often controversial Stable Diffusion. Each produces staggering (often unbelievable) images.

Good news: If you’re looking for a bit of experimentation, these programs have free trials. Once you run out, you’ll have to subscribe to Midjourney or buy into DALL-E’s credit system.

How to Use Midjourney

Midjourney’s image generator runs through the ever-popular Discord.

For some, this spurs the reaction: How do I use that? For others: It’s that easy?

Once you join the official server, you’ll have to navigate to one of Midjourney’s newbie channels. The space can be overwhelming initially, as a spree of new users generate anything they can.

Luckily, you can, too. From here, the process is as simple as typing, with imagination to boot.

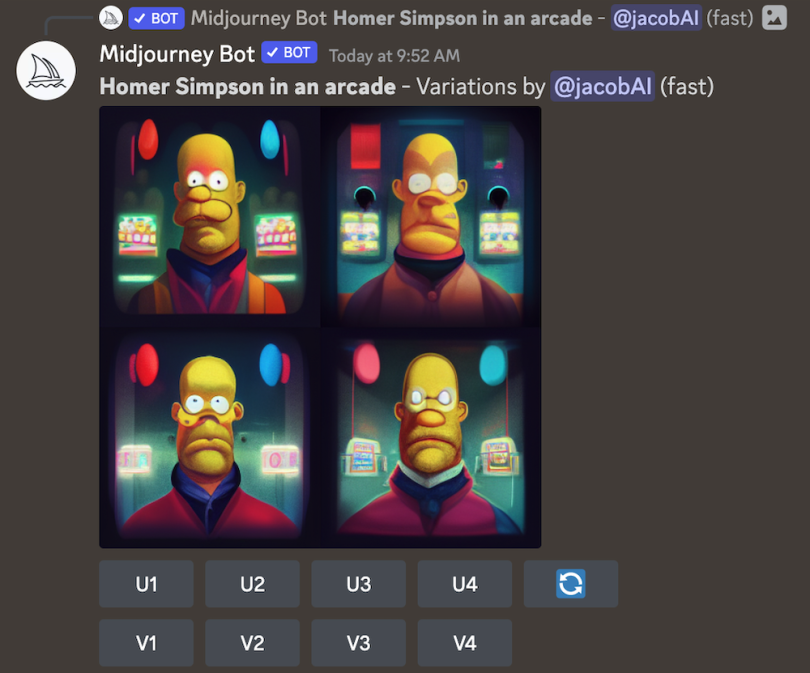

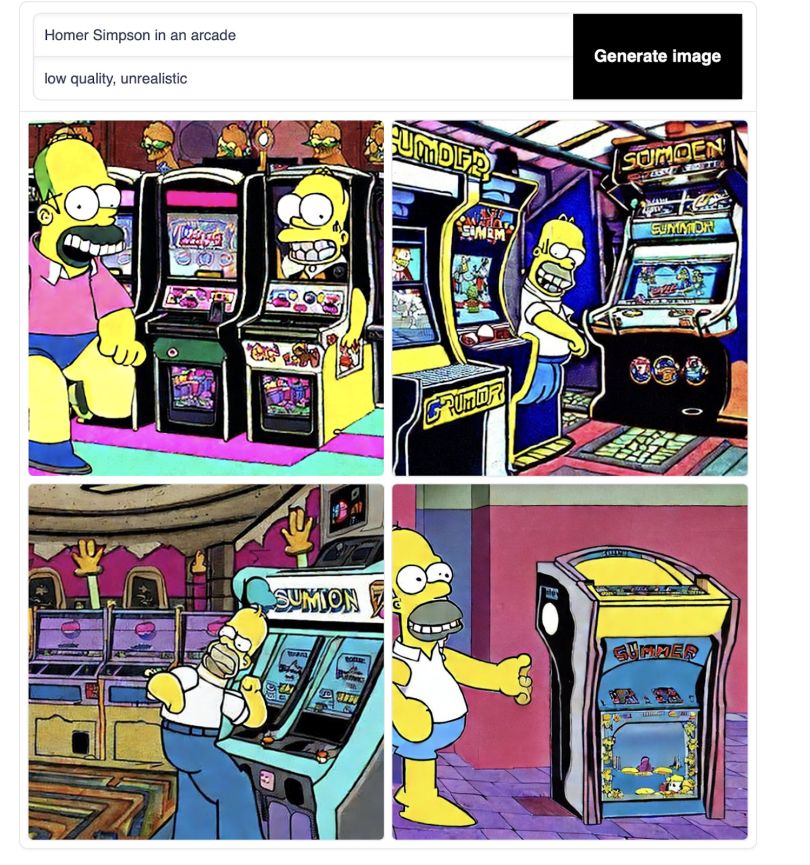

Type “/imagine” in the chat bar and enter your prompt. This cue tells the server you’re looking for an image. For our purposes, let’s try: “Homer Simpson in an arcade.”

“/imagine Homer Simpson in an arcade.”

As soon as you hit enter, Midjourney begins to generate. Unlike other AI generators, Midjourney lets you watch the image develop in real time, usually in about 30 seconds.

On the first go, the results can be confusing. Midjourney gives you a grid of images with several commands beneath it.

U means “upscale,” and V means “variation.” The numbers run left to right, top to bottom. The top left is U1, the top right is U2, the bottom left is U3 and the bottom right is U4.
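To make the button labels concrete, here is a minimal Python sketch of how a label maps to a spot in the grid. It assumes the numbering runs in reading order (left to right, top to bottom), and the function name `grid_position` is hypothetical, invented for this illustration. It is not part of Midjourney or Discord.

```python
def grid_position(button: str) -> str:
    """Describe what a Midjourney button label such as 'U1' or 'V4' refers to.

    Assumption: images are numbered in reading order,
    left to right and then top to bottom.
    """
    actions = {"U": "upscale", "V": "variation"}
    positions = {1: "top left", 2: "top right", 3: "bottom left", 4: "bottom right"}

    action = actions[button[0].upper()]   # first character picks the action
    index = int(button[1:])               # the rest is the image number
    return f"{action} of the {positions[index]} image"


print(grid_position("U1"))  # upscale of the top left image
print(grid_position("V4"))  # variation of the bottom right image
```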

If you like an image and want to see it in HD, you can choose the corresponding U to increase its definition. If you like the idea of an image, but want to see what else it can do, click the corresponding V. Midjourney will create four different images with the same baseline.

Clicking V4 presents me with four new options:

Notice: Midjourney’s generations are not as lifelike as DALL-E’s. While you can tailor the input with specific words, for instance “photorealistic,” “35mm” or “HDR,” I’ve found Midjourney much better suited for art.
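If you find yourself reusing the same style words, a small helper can assemble the text you type after “/imagine.” The sketch below is only an illustration: the function name `imagine_command` is hypothetical, and joining the style words with commas is a common community habit rather than a documented requirement.

```python
def imagine_command(subject: str, *style_words: str) -> str:
    """Build the text to type into Discord: '/imagine' plus a prompt.

    Assumption: style words are simply appended to the subject,
    separated by commas.
    """
    subject = subject.strip().rstrip(".")                      # drop a trailing period
    extras = [word.strip() for word in style_words if word.strip()]
    return "/imagine " + ", ".join([subject] + extras)


print(imagine_command("Homer Simpson in an arcade."))
# /imagine Homer Simpson in an arcade
print(imagine_command("Homer Simpson in an arcade", "photorealistic", "35mm", "HDR"))
# /imagine Homer Simpson in an arcade, photorealistic, 35mm, HDR
```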

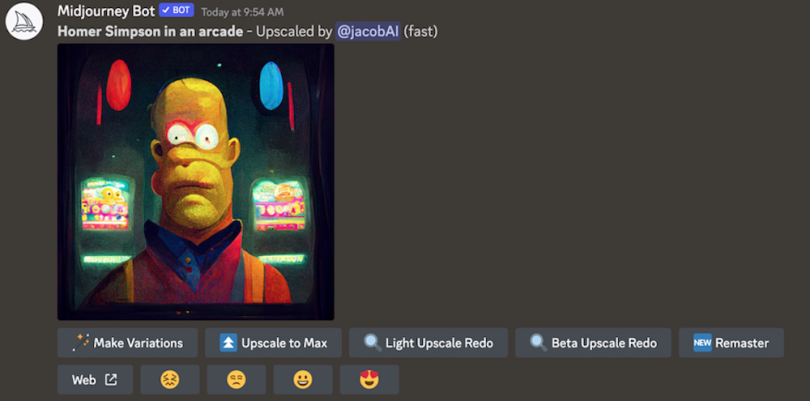

If I like the top left, I select “U1” to upscale.

Again, I can make variations. However, if I like the image, I can “Upscale to Max” to make it as HD as possible. This is the final, high-quality result:

Don’t be discouraged by Midjourney’s less-than-photorealistic results. While this program may not be as poised for visual trickery, it generates lovely, intriguing artwork.

How to Use DALL-E

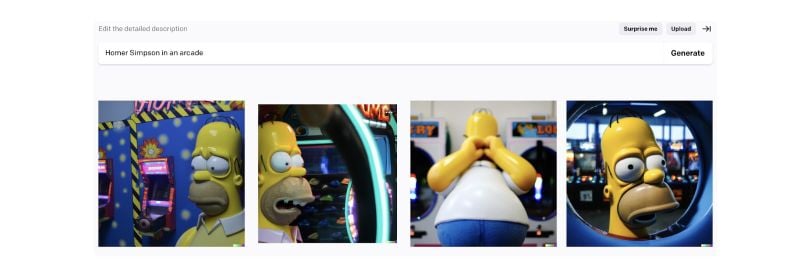

DALL-E is the king of realism and simplicity. This is the program you boot up with your friends on Friday night to show them what AI can do. The results are often less cerebral and artistic and more outright fun.

The process itself is quite simple. Once you create an OpenAI account, you’ll arrive at the image-generation bar.

From there, all you have to do is type. If you can’t think of anything, click Surprise Me.

Let’s try our old prompt: “Homer Simpson in an arcade.” Generate. Rather than watching the images form, you’ll track a loading bar, with some reference images below. Within 30 seconds, you’ll have your output.

The results are typically shocking. After the first bout of “How does it do that?” you may wonder: What else can it do?

By inputting the same prompt, you can see the limitless possibilities.

The words will often be jumbled, the figures demented, but it’s a small price to pay for images of this quality.

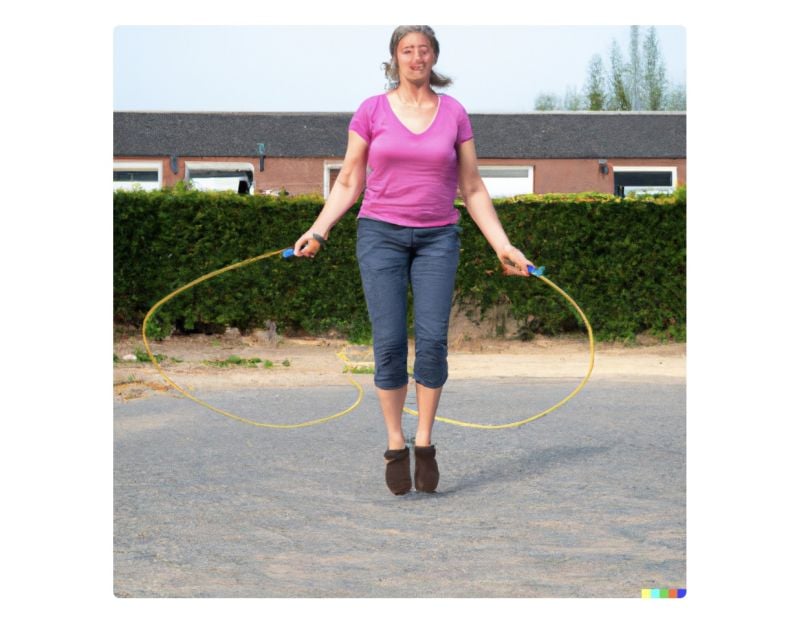

It’s important to note that DALL-E does much better with fictional characters than with humans. The program recognizes pop culture icons better and seemingly struggles to create lifelike figures with clear faces.

For instance, the prompt: “A fifty-year-old plays double dutch.”

Disturbing, I know.

How to Use Stable Diffusion

Stable Diffusion is another simple process, with a twist. Your prompt is what you want to see in the image, while your negative prompt is what you don’t want to see. If I want a seedy night image, I’ll try “Daylight” as a negative prompt. The output arrives in a similar time frame.

Ugly, right?

Still, we have means of getting it where we want. This is where the negative prompt comes in. Below the generation stage, you can browse Stable Diffusion’s successful example prompts.

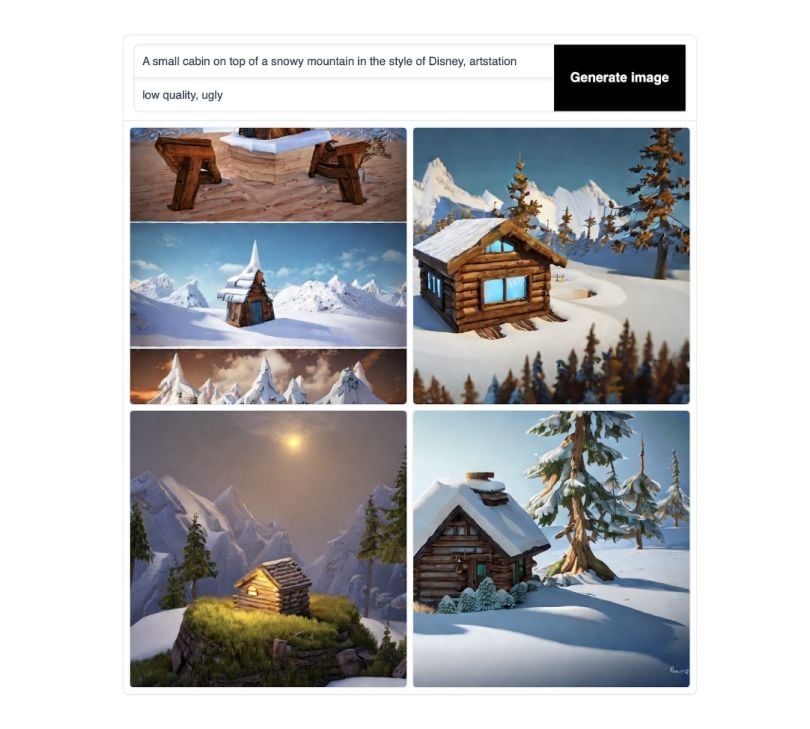

In the following example prompt, they wrote: “A small cabin on top of a snowy mountain in the style of Disney, artstation” with the negative prompt: “low quality, ugly.”

By telling Stable Diffusion not to be low quality or ugly, the program created a neat, beautiful image. Let’s try the same negative prompt on ours.

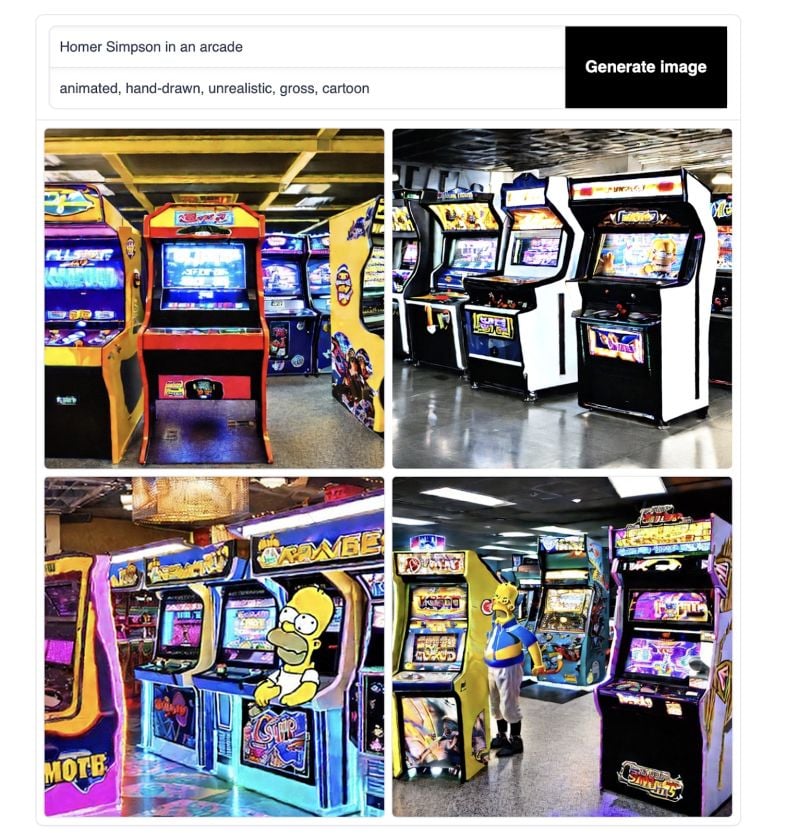

A bit better, but still not great. Let’s try to get out of the animated realm.

In the negative prompt, I entered: “animated,” “hand-drawn,” “unrealistic” and “cartoon.” Ideally, we can eliminate the ugly, early 2000s Cartoon Network look. I’d like a bit more artistry to it, like the Midjourney or DALL-E results.

A bit better. Like Midjourney and DALL-E, you’ll have to learn the program’s language. No matter which software you choose, their communities offer a vast wealth of knowledge and tips.
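For readers who want to keep track of prompt and negative prompt pairs like the ones in this section, here is a minimal Python sketch. The function name `build_request` is hypothetical. The dictionary keys `prompt` and `negative_prompt` are chosen to mirror the argument names that, to my knowledge, the open-source diffusers library uses for Stable Diffusion. The sketch only bundles text and does not generate an image.

```python
def build_request(prompt: str, negative_terms: list[str]) -> dict[str, str]:
    """Bundle a prompt with a comma-separated negative prompt.

    Assumption: the negative prompt is a plain comma-separated string,
    as in the example prompts quoted above.
    """
    cleaned = [term.strip() for term in negative_terms if term.strip()]
    return {
        "prompt": prompt.strip(),
        "negative_prompt": ", ".join(cleaned),
    }


request = build_request(
    "A small cabin on top of a snowy mountain in the style of Disney, artstation",
    ["low quality", "ugly"],
)
print(request["negative_prompt"])  # low quality, ugly
```

Keeping the negative terms in a list makes it easy to add or drop one word at a time between attempts, which is how the experiments above proceeded.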

Analyzing the Results

Clearly, the AI isn’t sinking baskets every time. The generations took me more than an hour, and I still may not have the images I want.

No matter what image you find, the human must sort through the output. While the prompting can become more specific and coded (guided toward lens sizes and aspect ratios) and each program has a community of users willing to help, there are natural limitations.

As a whole, the images could not exist without human direction. The human must have the vision, the idea, and the taste to know what works and doesn’t. In this way, the tool is still a tool, and the human is still the shaper, the tastemaker.

AI-Generated Art: An Explosion of Creativity

It’s difficult to deny the artistry of what AI is doing. AI is pulling on a reservoir of great artists and iconic works, referencing them as an artist does, but maddeningly, all at once. The ethics are unknown: copyrights questionable, accuracy tainted.

Now, anyone can create AI art images. While this opens the Internet to funny content, the AI art world is changing so fast we have little time to distinguish its possibilities from its consequences.

Earlier this year, a machine-generated image titled Théâtre D’opéra Spatial took first place in the digital arts category at the Colorado State Fair’s fine arts competition, prompting controversy in the greater art world. Its creator, Jason M. Allen, saw no issue. The distinction between human and AI art had already begun to blur: in 2018, Portrait of Edmond de Belamy, an AI-generated work by the Paris collective Obvious, sold at a Christie’s auction for $432,500.

But for now, the train isn’t stopping. We are watching the future take shape, image by image. If the last months are anything to go by, the future will be a succession of ever-sharpening, ever-more-realistic images of Pikachu and Peter Griffin.

In the meantime, boot up DALL-E, imagine a prompt — and behold.